AI Outperforms Humans in Most Performance Benchmarks

Stanford University’s Institute for Human-Centered Artificial Intelligence (HAI) has published the seventh edition of its extensive AI Index report, authored by a diverse group of academic and industry specialists.

This latest edition, more comprehensive than its predecessors, underscores the swift advancement and increasing relevance of AI in our daily lives. It delves into various topics, from the sectors leveraging AI most to countries most concerned about job displacement due to AI. However, a standout finding from the report is AI’s performance compared to humans.

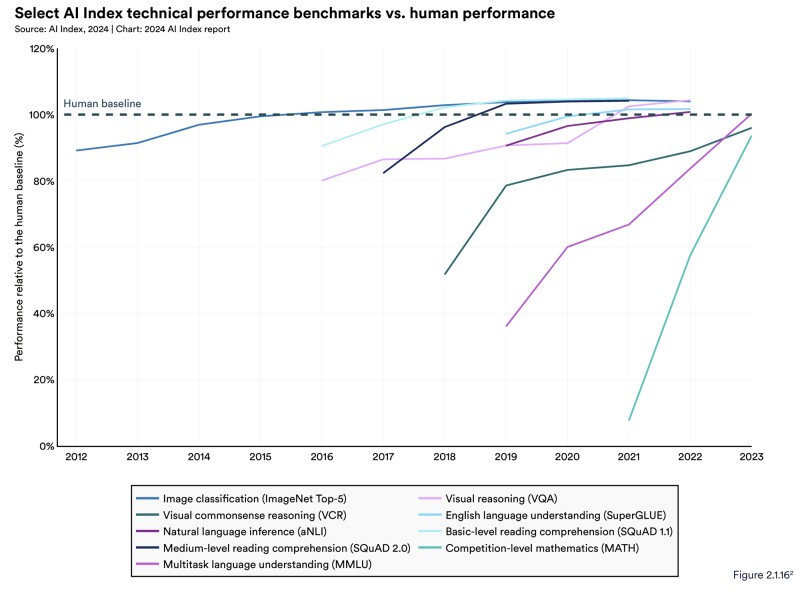

For those who haven’t been keeping up, AI has already outperformed us in a surprisingly wide range of significant tasks. It began with image classification in 2015, followed by basic reading comprehension in 2017, visual reasoning in 2020, and natural language inference in 2021.

Adapting to AI’s Rapid Progress

The pace at which AI is advancing is remarkable, rendering many existing benchmarks outdated. Researchers in the field are now racing to create new, more complex benchmarks. In essence, AIs are becoming so adept at passing tests that we now need new evaluations, not to assess competence, but to identify the areas where humans still maintain an edge.

It’s important to mention that the following results are based on these potentially outdated benchmarks. However, the overarching trend remains unmistakable:

AI Index 2024

Examine those trends, particularly the nearly vertical line in the most recent tests. Keep in mind, these machines are essentially in their infancy.

The latest AI Index report highlights that in 2023, AI still faced difficulties with intricate cognitive tasks such as advanced math problem-solving and visual commonsense reasoning.

However, describing this as ‘struggling’ could be misleading; it doesn’t imply poor performance.

AI’s Remarkable Progress in Solving Complex Math Problems

On the MATH dataset, which comprises 12,500 challenging competition-level math problems, AI performance has improved dramatically since the benchmark was introduced. In 2021, AI systems could solve only 6.9% of these problems. By 2023, a model based on GPT-4 solved 84.3%. The human baseline stands at 90%.
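To put those figures side by side, the arithmetic can be sketched in a few lines of Python. The percentages come from the paragraph above; the function name `gap_summary` is made up for illustration, and the sketch assumes all three figures are measured on the same scale.

```python
def gap_summary(start_pct: float, latest_pct: float, human_pct: float) -> dict:
    """Summarize progress toward a human baseline (all inputs in percent)."""
    return {
        # Percentage points gained between the first and latest result
        "gain_points": round(latest_pct - start_pct, 1),
        # Percentage points still separating the model from the human baseline
        "gap_to_human_points": round(human_pct - latest_pct, 1),
        # Latest result as a fraction of the human baseline
        "share_of_human_baseline": round(latest_pct / human_pct, 3),
    }


# Figures quoted in the article for the MATH dataset
math_summary = gap_summary(start_pct=6.9, latest_pct=84.3, human_pct=90.0)
print(math_summary)
```

On these numbers, the best model gained 77.4 percentage points in two years and sits 5.7 points short of the human baseline.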

And we’re not referring to the average person; we’re talking about individuals capable of solving questions like these:

Hendrycks et al./AI Index 2024

That’s the state of advanced math as of 2024, and we’re still in the early stages of the AI era.

Visual Commonsense Reasoning (VCR)

Then there’s visual commonsense reasoning (VCR). Unlike basic object recognition, VCR evaluates how AI utilizes commonsense knowledge in visual scenarios to make predictions.

For instance, when presented with an image of a cat on a table, an AI capable of visual commonsense reasoning should anticipate that the cat might leap off the table, or recognize that the table can support its weight.

The report revealed a 7.93% improvement in VCR from 2022 to 2023, reaching a score of 81.60, compared to the human baseline of 85.
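As a rough check on those numbers, the sketch below backs out the 2022 score they imply. It assumes the 7.93% is a relative increase over the 2022 score, which the text does not state explicitly; the function name `implied_previous_score` is hypothetical.

```python
def implied_previous_score(current: float, relative_gain_pct: float) -> float:
    """Back out the prior score, assuming the gain is relative to that prior score."""
    return current / (1 + relative_gain_pct / 100)


# Figures quoted in the article for VCR
vcr_2023 = 81.60
human_baseline = 85

vcr_2022 = implied_previous_score(vcr_2023, 7.93)  # assumption: relative gain
gap_to_human = human_baseline - vcr_2023

print(f"Implied 2022 score: {vcr_2022:.1f}")
print(f"Gap to human baseline: {gap_to_human:.1f}")
```

Under that assumption, the 2022 score would have been about 75.6, and the 2023 result is 3.4 points below the human baseline.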

Zellers et al./AI Index 2024

Five years ago, the idea of presenting a computer with an image and expecting it to grasp the context well enough to provide an answer would have seemed far-fetched.

Today, AI produces written content across various fields. However, despite significant advancements, large language models (LLMs) still sometimes produce what are euphemistically called ‘hallucinations’: incorrect or misleading information presented as if it were fact.

The Risks of Over-Reliance on AI

A notable incident illustrating this occurred in 2023 and involved Steven Schwartz, a New York attorney who relied on ChatGPT for legal research without verifying the information. The judge overseeing the case identified fabricated legal cases in the documents generated by the AI and fined Schwartz $5,000 (AU$7,750) for his oversight. The incident gained global attention.

HaluEval serves as a benchmark to evaluate these hallucination tendencies. The tests revealed that many LLMs still grapple with this issue.

Generative AI also faces challenges in ensuring truthfulness. The latest AI Index report used TruthfulQA as a metric to assess the truthfulness of LLMs. This benchmark consists of 817 questions covering topics like health, law, finance, and politics, designed to test whether a model repeats common misconceptions that humans often hold.

GPT-4, launched in early 2023, scored the highest on this benchmark with a score of 0.59, nearly three times better than a GPT-2-based model evaluated in 2021. This significant improvement suggests that LLMs are steadily advancing in providing accurate responses.

As for AI-generated images, to appreciate the remarkable progress in text-to-image generation, one can look at Midjourney’s attempts at illustrating Harry Potter since 2022:

Midjourney/AI Index 2024

That represents nearly two years of AI advancement. How much time do you think a human artist would need to achieve comparable proficiency?

The Holistic Evaluation of Text-to-Image Models (HEIM) was employed to benchmark models on their text-to-image generation skills, focusing on 12 aspects essential for the practical application of images.

Evaluating AI-Generated Images

Human evaluators assessed the generated images and found that no single model excelled in every criterion. OpenAI’s DALL-E 2 performed best in text-to-image alignment, meaning how well the image matched the provided text. The Stable Diffusion-based Dreamlike Photoreal model ranked highest in terms of quality (how closely it resembled a photo), aesthetics (visual appeal), and originality.

Next year’s report promises to be incredibly exciting.

It’s worth noting that this AI Index Report concludes at the end of 2023, a year marked by intense AI advancements and rapid progress. However, 2024 has proven even more eventful, witnessing the introduction of groundbreaking developments like Suno, Sora, Google Genie, Claude 3, Channel 1, and Devin.

Each of these innovations, along with several others, holds the potential to fundamentally transform entire industries. Looming over all of them is the enigmatic presence of GPT-5, which could prove so comprehensive and versatile that it overshadows all others.

AI is undoubtedly here to stay. The swift pace of technological advancements observed throughout 2023, as highlighted in this report, indicates that AI will continue to advance, narrowing the gap between humans and technology.

We understand this is quite a bit to take in, but there’s more to explore. The report delves into the challenges accompanying AI’s progression and its impact on the global perception of its safety, reliability, and ethics. Keep an eye out for the second part of this series, coming soon!

Read the original article on: New Atlas
