Camera Captures the Animal Perspective with Up to 99% Accuracy

It’s simple to overlook that the majority of animals perceive the world differently from humans. In reality, due to their ability to see infrared and ultraviolet light, many animals encounter a world that remains entirely hidden from our sight.

Now, researchers have created hardware and software that record footage as though it were seen through the eyes of animals such as honeybees and birds.

It presents a captivating and revealing perspective on nature and animal behavior, with researchers from the University of Sussex and the Hanley Color Lab at George Mason University anticipating a broad range of applications. Recognizing its potential, they have released the software as open-source, inviting everyone from nature documentary producers and ecologists to outdoor enthusiasts and bird-watchers to explore the unique visual realities of these animals.

Unveiling the Dynamic World of Animal Vision

Senior author Daniel Hanley expressed the team’s enduring fascination with how animals perceive the world. While modern techniques in sensory ecology enable insights into how static scenes might appear to animals, crucial decisions often revolve around dynamic elements, such as detecting food or assessing a potential mate. The introduced hardware and software tools are designed to capture and display animal-perceived colors in motion, benefiting both ecologists and filmmakers.

The photoreceptors in our eyes, the rods and cones, determine our visual capabilities, including color and depth perception. Some animals, like vampire bats and mosquitoes, can detect infrared (IR) light, while butterflies and certain birds can see ultraviolet (UV) light. Both lie outside the spectrum visible to humans.

This variance in vision makes it hard to understand animal behavior and to assess our inadvertent impact on animals' ability to communicate or to find food, shelter, or a mate. Current methods, such as spectrophotometry, have limitations: they are time-consuming, depend on specific lighting conditions, and cannot capture moving images, so they give only a partial picture of what animals see.

Capturing the World Through Animal Eyes

This is where the researchers' approach differs. Their tool uses multispectral photography, a technique that can capture light across various wavelengths, including those in the infrared (IR) and ultraviolet (UV) ranges. The camera records video in four color channels (blue, green, red, and UV) and then processes it to produce footage that simulates what a specific animal would see, based on what is known about that animal's eye receptors.
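To make the processing step concrete, the conversion from camera channels to an animal's photoreceptor responses can be sketched as one weighted sum per receptor. This is a minimal illustration only: the article does not describe the actual algorithm, and the function name `camera_to_receptors` and the weights in `CAMERA_TO_BEE` are hypothetical placeholders, not measured sensitivities.

```python
from typing import List, Sequence

# Hypothetical weights: rows are honeybee receptors (UV, blue, green),
# columns are camera channels in the order (UV, blue, green, red).
# These numbers are illustrative placeholders, not measured values.
CAMERA_TO_BEE: List[List[float]] = [
    [0.90, 0.10, 0.00, 0.00],
    [0.05, 0.85, 0.10, 0.00],
    [0.00, 0.10, 0.80, 0.10],
]


def camera_to_receptors(
    pixel: Sequence[float],
    weights: Sequence[Sequence[float]] = CAMERA_TO_BEE,
) -> List[float]:
    """Estimate receptor responses for one pixel as weighted sums of its channels."""
    for row in weights:
        if len(row) != len(pixel):
            raise ValueError("each weight row needs one entry per camera channel")
    return [sum(w * c for w, c in zip(row, pixel)) for row in weights]


# A pixel that reflects only UV light, in (UV, blue, green, red) order.
uv_only = camera_to_receptors([1.0, 0.0, 0.0, 0.0])
```

With these placeholder weights, a UV-only pixel mostly excites the assumed UV receptor and barely registers on the others. A real pipeline would need weights derived from the camera's and the animal's measured spectral sensitivities.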

Vasas et al/PLOS Biology/(CC0 1.0)

Separating UV and Visible Light

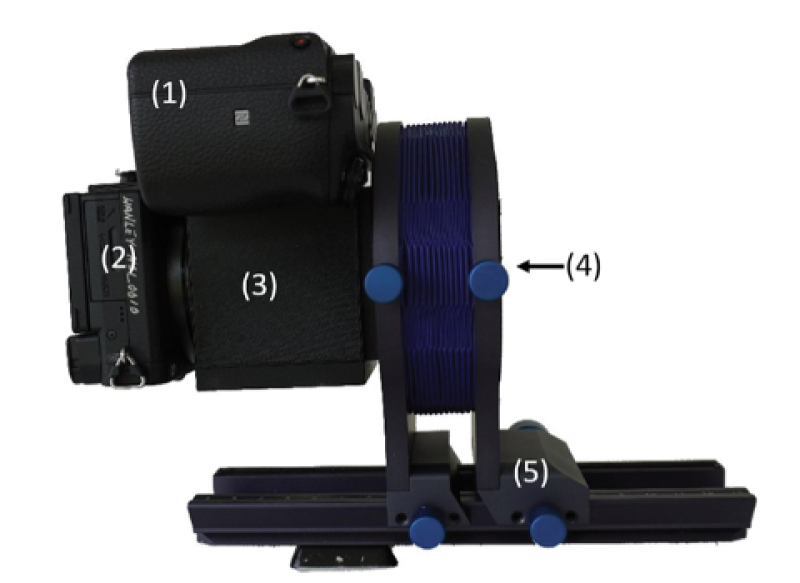

The team devised a portable 3D-printed device housing a beam splitter that separates ultraviolet (UV) from visible light, with each captured by a dedicated camera. The UV-sensitive camera alone does not record perceptible data, but combined with the other camera it yields high-quality video. Algorithms align the footage and render it from the perspective of various animals, with an average accuracy of 92% and some tests reaching 99%.

The hardware is designed to be compatible with commercially available cameras, and the researchers have shared the software as open-source, hoping others will adapt it for their specific wildlife filming requirements.

The system has limitations: it cannot capture polarized light, and its restricted frame rate makes fast-moving subjects challenging to film. Even so, it provides unique insights that can improve our understanding of animal behavior and guide us in mitigating our impact on the natural world.

And regarding the footage?

The team recorded a museum specimen of a Phoebis philea butterfly using avian receptor noise-limited (RNL) false colors. The researchers pointed out: “Another possible application of the system is the rapid digitization of museum specimens. This butterfly exhibits UV coloration through both pigments and structures. Vivid magenta hues emphasize the areas predominantly reflecting UV light, while purple regions reflect similar amounts of UV and long-wavelength light. The specimen is positioned on a stand and rotated slowly, illustrating the dynamic changes in iridescent colors based on the viewing angle.”

An anti-predator display by a caterpillar as seen in the vision of Apis (bee).

Spectral Challenges and Aposematic Signals

The researchers remarked, “Conceal and reveal displays can present challenges for spectroscopy and standard multispectral photography.” They presented a video featuring a black swallowtail Papilio polyxenes caterpillar exhibiting its osmeteria. The video was rendered in honeybee false colors, where UV, blue, and green quantum catches are represented as blue, green, and red, respectively. The human-perceived yellow osmeteria and yellow spots on the caterpillar’s back, both strongly reflecting in the UV, appear magenta in honeybee false colors (as the robust responses on the honeybee’s UV-sensitive and green-sensitive photoreceptors are depicted as blue and red, respectively). Given that many caterpillar predators perceive UV, this coloration may serve as an effective aposematic signal.
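The honeybee false-color mapping described above can be sketched in a few lines. This is an illustrative sketch, not the researchers' software: the function name `bee_false_color` and the assumption that receptor responses are scaled to the range 0 to 1 are hypothetical.

```python
from typing import Tuple


def _to_byte(value: float) -> int:
    """Clamp a response to [0, 1] and scale it to an 8-bit display value."""
    return round(max(0.0, min(1.0, value)) * 255)


def bee_false_color(uv: float, blue: float, green: float) -> Tuple[int, int, int]:
    """Return a display (R, G, B) triple for honeybee receptor responses.

    Following the mapping in the text: UV is shown as blue,
    blue as green, and green as red.
    """
    return (_to_byte(green), _to_byte(blue), _to_byte(uv))


# Strong UV and green responses with a weak blue response,
# as described for the yellow osmeteria.
osmeteria = bee_false_color(uv=1.0, blue=0.0, green=1.0)  # (255, 0, 255)
```

The result has full red and blue with no green, which is magenta on a display, matching the appearance the researchers describe for the UV-reflecting yellow patches.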

Bees engaging in foraging and interactions on flowers as observed in Apis vision.

The researchers highlighted, “The camera system has the capability to document naturally occurring behaviors in their authentic settings. This is demonstrated through three brief clips showcasing bees foraging (first and second clips) and engaging in a fight (third clip) in their natural environment. The videos are presented in honeybee false colors, with the honeybee’s UV, blue, and green photoreceptor responses depicted as blue, green, and red, respectively.”

Lastly, an iridescent peacock feather viewed through the eyes of four different animals: its own species (peafowl), humans, honeybees, and dogs.

Varied Perceptions Across Species

To conclude, the researchers clarified, “The camera system is capable of measuring angle-dependent structural colors, including iridescence. This is demonstrated in a video featuring a highly iridescent peacock (Pavo cristatus) feather. The colors in this video are represented as (A) peafowl Pavo cristatus false color, where blue, green, and red quantum catches are shown as blue, green, and red, respectively, and UV is superimposed as magenta. While resembling a standard color video in many aspects, the UV-iridescence (highlighted in the video at approximately five seconds) is observable on the blue-green barbs of the ocellus (“eyespot”). Additional UV iridescence is evident along the perimeter of the ocellus, situated between the outer two green stripes. Intriguingly, the peafowl perceives the iridescence more prominently than (B) humans (standard colors), (C) honeybees, or (D) dogs.”
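The peafowl rendering in panel (A) keeps blue, green, and red as they are and overlays UV as magenta. One simple way to picture that overlay is to add the UV signal to both the red and blue display channels, since magenta is red plus blue. The additive blend, the function name `peafowl_false_color`, and the 0-to-1 input scale are all assumptions made for illustration; the quote does not say how the superimposition is computed.

```python
from typing import Tuple


def _to_byte(value: float) -> int:
    """Clamp a response to [0, 1] and scale it to an 8-bit display value."""
    return round(max(0.0, min(1.0, value)) * 255)


def peafowl_false_color(
    uv: float, blue: float, green: float, red: float
) -> Tuple[int, int, int]:
    """Return a display (R, G, B) triple with UV overlaid as magenta.

    Assumed blend: the UV signal is added to the red and blue
    channels and the result is clipped to the displayable range.
    """
    return (_to_byte(red + uv), _to_byte(green), _to_byte(blue + uv))


# A green barb with no UV keeps its ordinary color...
plain_green = peafowl_false_color(uv=0.0, blue=0.0, green=1.0, red=0.0)
# ...while a dark patch that reflects only UV shows up as magenta.
uv_patch = peafowl_false_color(uv=1.0, blue=0.0, green=0.0, red=0.0)
```

Under this assumed blend, areas without UV reflectance look like a standard color video, which is consistent with the description of the footage.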

Read the original article on: New Atlas
